Absolutely not.

Absolutely not.

It’s a trap.

- A message from Canada

I see. Makes sense.

There’s a WIP VirtIO driver in a PR but it’s not done yet. VMware’s own VMSVGA is open source if I remember correctly. I wonder if they’ll adapt it to KVM and if they do, whether that’ll be usable in KVM without VMware.

If we get VirtIO 3D acceleration in Windows guests from this, I’d be really happy.

You could use a systemd unit file:

    [Unit]
    Description=docker_compose_systemd-sonarr
    After=docker.service
    Requires=docker.service

    [Service]
    TimeoutStartSec=0
    WorkingDirectory=/var/lib/sonarr
    ExecStartPre=-/usr/bin/docker compose kill --remove-orphans
    ExecStartPre=-/usr/bin/docker compose down --remove-orphans
    ExecStartPre=-/usr/bin/docker compose rm -f -s -v
    ExecStartPre=-/usr/bin/docker compose pull
    ExecStart=/usr/bin/docker compose up
    Restart=always
    RestartSec=30

    [Install]
    WantedBy=multi-user.target

You’d place your compose file in the working directory, /var/lib/sonarr. Because the unit runs docker compose pull before every start, the tag you’ve set for the image in the compose file decides whether the container gets auto-updated or stays fixed. E.g. lscr.io/linuxserver/sonarr:latest would be updated whenever the service (re)starts, whereas lscr.io/linuxserver/sonarr:4.0.10 would keep the container at version 4.0.10. If you want to move on from 4.0.10, you’d have to change the tag in the compose file.
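To make the tag distinction concrete, here is a rough Python sketch that guesses whether an image reference would pick up new versions on each pull. The function names and the set of "floating" tags are my own assumptions for illustration, not anything Docker defines. It's only a heuristic: a publisher can re-push a version tag too.

```python
# Hypothetical helper: guess whether `docker compose pull` can bring in a
# new version for a given image reference. Heuristic only.

# Assumption: tags that publishers typically move to newer builds.
FLOATING_TAGS = {"latest", "develop", "nightly"}


def split_image_ref(ref: str) -> tuple[str, str]:
    """Split an image reference into (name, tag); the tag defaults to 'latest'."""
    # Drop a digest suffix such as @sha256:... if present.
    name = ref.split("@", 1)[0]
    # Only a colon in the last path component separates the tag, so a
    # registry port like localhost:5000/app is not mistaken for a tag.
    last_slash = name.rfind("/")
    colon = name.rfind(":")
    if colon > last_slash:
        return name[:colon], name[colon + 1:]
    return name, "latest"


def updates_on_pull(ref: str) -> bool:
    """Return True if the reference uses a tag that is expected to move."""
    if "@" in ref:
        # Pinned by digest: a pull always resolves to the same image.
        return False
    _, tag = split_image_ref(ref)
    return tag in FLOATING_TAGS


print(updates_on_pull("lscr.io/linuxserver/sonarr:latest"))  # True
print(updates_on_pull("lscr.io/linuxserver/sonarr:4.0.10"))  # False
```

A reference with no tag at all counts as latest, which is also how Docker resolves it.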

And there are breaking changes in this Jellyfin release.

Sounds a bit like the drug dealer’s business model.

I think federated non-profit video platforms won’t work on large scale without P2P.

Not noticeable with always-on Tailscale in the default split-tunnel mode. That is, Tailscale is only used to reach other Tailscale machines, and everything else goes out via the default route.
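As a rough illustration of the split-tunnel idea, here is a small Python sketch that classifies a destination address. It assumes the tailnet only uses Tailscale’s IPv4 range, 100.64.0.0/10, with no subnet routers or exit node configured. The function name is made up, and the real decision is made by the OS routing table, not by code like this.

```python
import ipaddress

# Tailscale assigns IPv4 addresses from the CGNAT range 100.64.0.0/10.
# Assumption: no subnet routers or exit nodes, which would add more routes.
TAILNET_V4 = ipaddress.ip_network("100.64.0.0/10")


def route_for(destination: str) -> str:
    """Return which path a destination would take in split-tunnel mode."""
    addr = ipaddress.ip_address(destination)
    # Only tailnet addresses go through the tunnel; the rest use the default route.
    return "tailscale" if addr in TAILNET_V4 else "default"


print(route_for("100.101.102.103"))  # tailscale
print(route_for("1.1.1.1"))          # default
```

Since ordinary Internet traffic never enters the tunnel, it sees no added latency, which fits with the always-on setup not being noticeable.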

Yes. I’m using the standard typing system on an ANSI keyboard which requires pinkies.

The distance and the budget call for 75-85". I have had good luck with an entry-level Sony. I bought an X85K 85" two years ago. It’s one step above the cheapest model. The cheapest model was an IPS panel which had a bit shittier performance. I don’t recall in what regard. The X85K uses a Samsung panel. It’s a standard no-nonsense LED side backlight which is very robust over time. The original unit was a lemon. It developed a line across the screen. I got it replaced under warranty. The second unit has been flawless so far. Software-wise, it’s a bog-standard Android TV/Google TV. It doesn’t require an Internet connection to set up. That said, I use a CCwGTV (Chromecast with Google TV) with it and I don’t hear a peep from the TV OS about anything. The only thing I didn’t like about it was the price at the time. I bought it straight from Sony; they ship from the GTA. It was available from other retailers too. I think this model might still be current actually. I still see it on Sony’s website along with another one, the X85J.

Prior to that I had an entry level Samsung 75". It was fine too. Didn’t require Internet. The panel was a similar affair, slightly worse in terms of blacks and uniformity but I’m sure that wasn’t due to its Samsungness but was simply a cheaper panel than what Sony uses.

cross device syncing between desktop and phone

This is me

The VPN should keep access to the homelab even when the external IP changes, assuming the VPN connection is initiated from the homelab to the cloud. The reverse proxy would then use the VPN-internal IPs to connect to the services.

If you’re switching low power inconsequential things like LED lights, they’re OK.

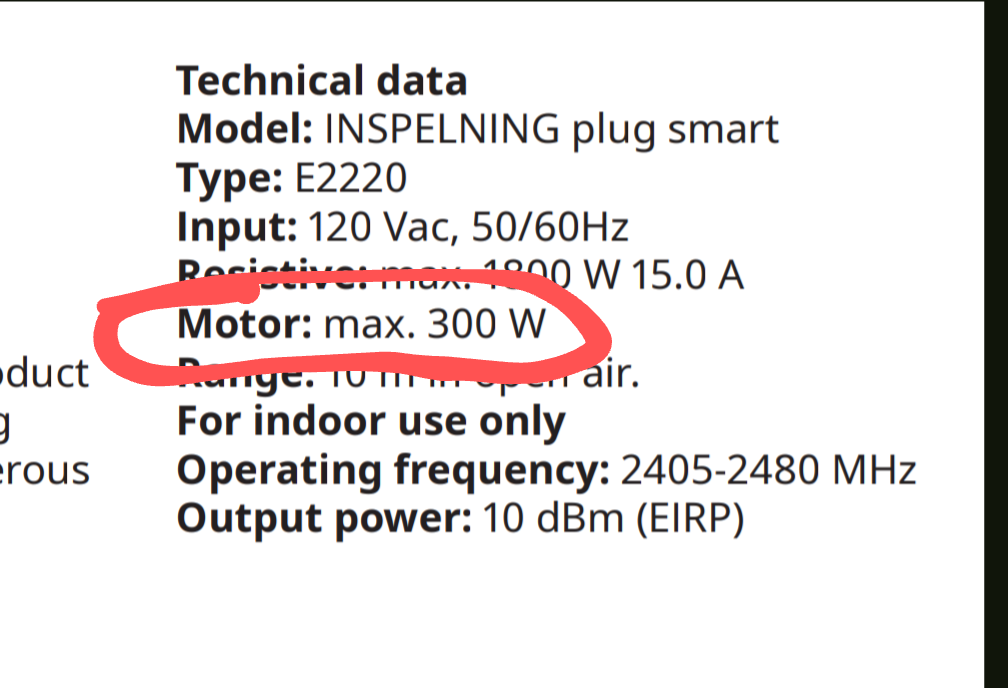

This like most plugs in this format is not for inductive loads so it can only handle 300W with such:

It might be OK if the AC units are small enough.

If you’re gonna be switching AC units, you likely want a plug that can switch inductive loads. Most can’t. Well, they can, but their relays crap out quickly. Here’s an example of a unit rated for inductive loads. It’s for NA and uses Z-Wave so it’s not what you’re looking for. They explicitly call out that it can be used for AC motors. Some units explicitly say they can’t be used for inductive loads, but many don’t, and you learn the hard way.

Do you mean via QEMU without hardware acceleration?

OK, so people can use the definition instead. In fact it might be more useful.